Fortune a Day - Recap - Week 7

14 Feb 2025 Reading time: 30 seconds

Here’s my week 7 recap of my Fortune A Day project!

To stay on top of these daily, follow Fortune a Day on Bluesky!

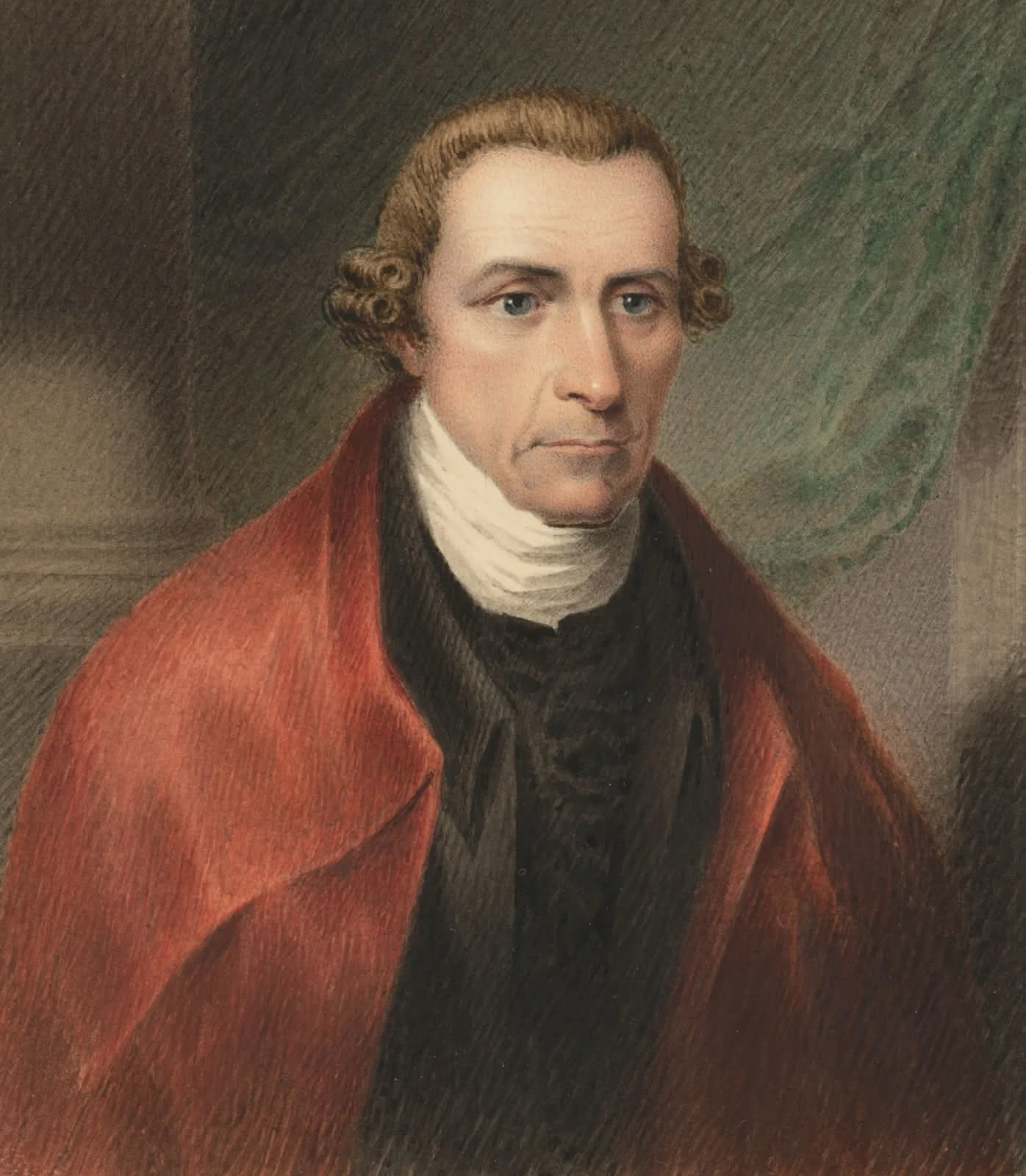

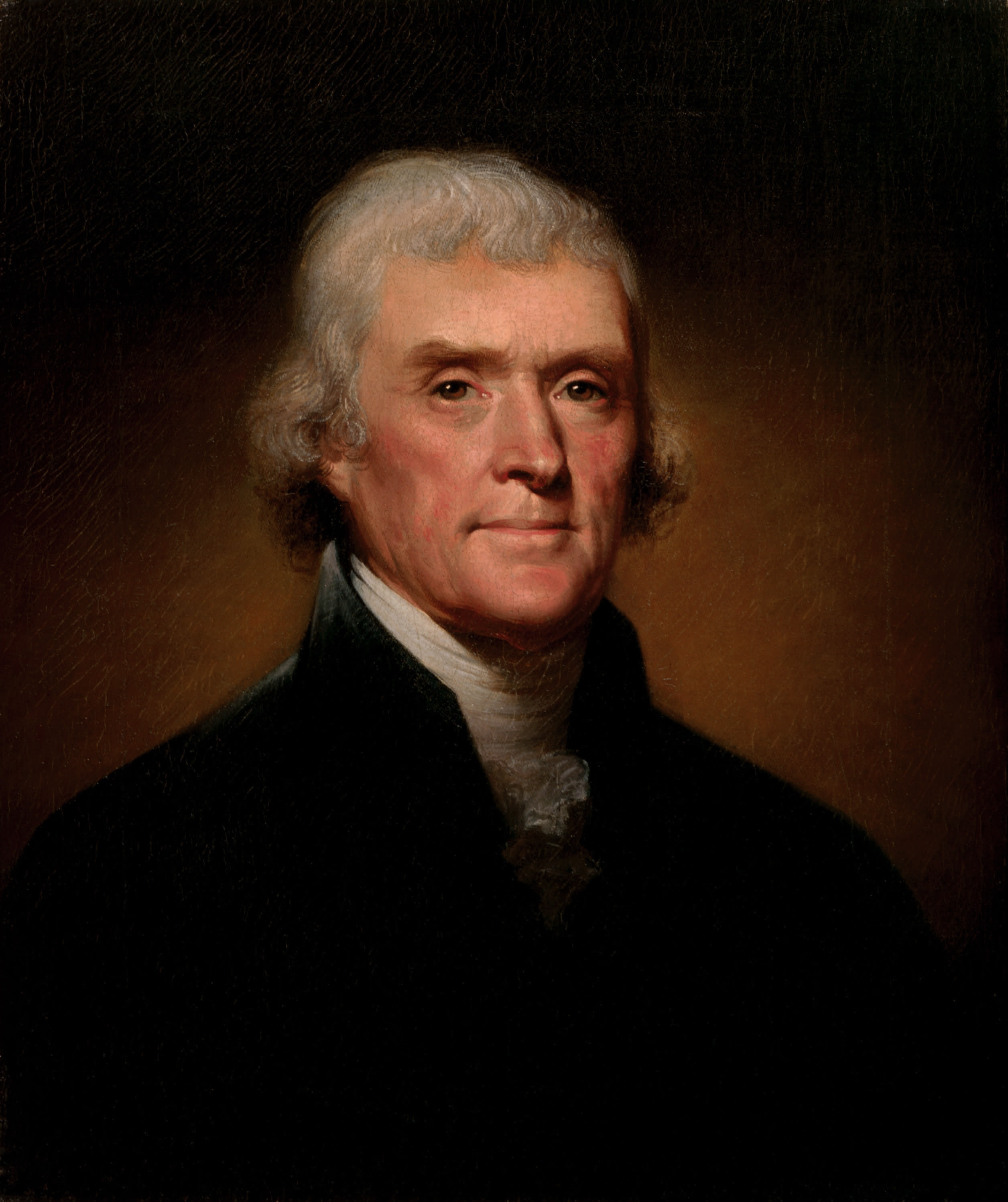

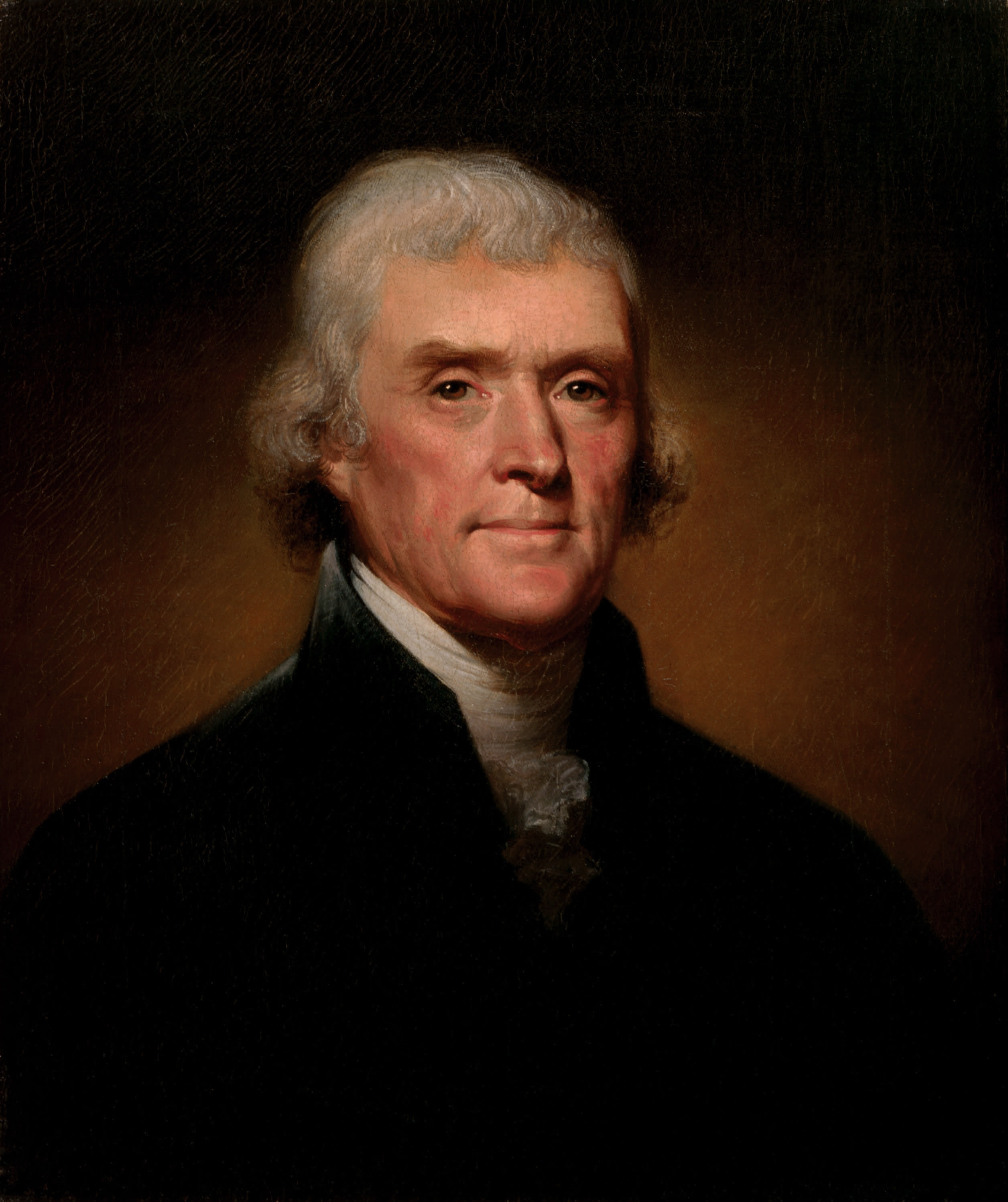

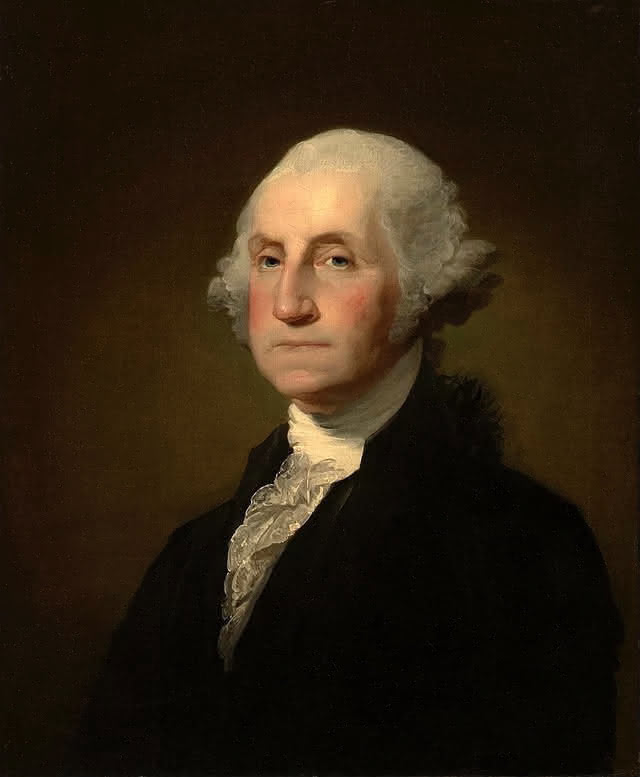

Source. License. Modified by cropping and compressing.

There’s a lot of misinformation going around about USAID: what it is, along with fabricated claims of fraud. I do not really want to get into all of that here, but if you are skeptical, please do some searching online; such claims have been debunked. A simple search such as "USAID misinformation" will turn up plenty of information, and from there you can read and see for yourself.

There are a few articles I want to highlight on the consequences of potentially removing USAID and related topics. Each has its own angle, but one thing remains across all of them: USAID helps the world and its people, yes, but it also helps America and Americans in a significant manner.

Click on each of the titles in the bulleted list below to go to the source articles. I highly recommend reading through them all, but I’ll provide quotes and commentary here too.

Adam Kinzinger, a former (Republican) congressman from the great state of Illinois, wrote this article, which outlines his personal experience with USAID as well as why the agency is so important.

Quotes:

“As a member of Congress, I visited USAID programs in many countries including, among others, Pakistan, Kenya, Ethiopia, and Egypt. I saw how our aid literally saves lives and builds local economies. I also saw that along the way, USAID fulfills its secondary mission of promoting democracy and free markets. (Do you hear me conservatives? USAID promotes capitalism.) USAID also buys billions of dollars’ worth of wheat, rice, and soybeans from American producers”

“You could scour USAID’s projects and find other examples of waste and other scandals like the poppy-grower debacle. This is true in the case of every bureaucracy and is why it makes sense to consistently audit the agency’s activities which, by the way, is already being done. But if you want to have a special inquiry into USAID, I say, “Bring it on.” If there are ways to make it more efficient, to force greater focus on its mission, to reform its operations, let’s do it.”

Like I said above, it’s worth a read - and it is not too long - the personal experience with the agency is interesting as well.

“A 71-year-old woman has died after her oxygen supply was cut off when the United States announced a freeze on aid funding.”

People are already dying, within days, because of this fiasco. Do read the full article - there’s a bunch of additional information on both the short-term and long-term effects, and plenty more to help understand the who/what/how of the aid that the richest country in the history of the world provides.

“President Donald Trump’s disruption of the U.S. Agency for International Development threatens to harm both poor people abroad and farmers at home.”

“While the U.S. is the world’s largest food donor overall, it ranks “way lower” than most European countries in donations per capita, said Hertzler, who grew up on a farm in Northumberland County, Pennsylvania.”

“That could cause problems for the $2 billion USAID spends annually buying food from U.S. farmers for relief efforts.”

What is interesting about this is that not only does this food feed people, many of whom would starve to death otherwise, it also amounts to a $2 billion subsidy for farmers in the U.S.A. Remove it and many, many farms will go under, which is obviously a massive issue. Not only is this economically painful, it is also a national security concern: food is one of the few things that we absolutely must have significant capacity to produce internally in case of war, famine, or other countries withholding supply.

Interesting how this was the first agency targeted. Interesting. Do some research into this 🍐😀.

I’d encourage everyone to do some research into USAID in addition to reading the articles above.

“How easy it is to make people believe a lie, and how hard it is to undo that work again!”

Hard, yes; impossible… no.

The main issue we face as a pro-democracy and pro-American movement is the extreme amount of disinformation being pushed by multiple adversaries, whether it’s foreign governments, self-interested billionaires, or corrupted government officials.

Many, many, many Americans are lost in this cycle, consuming almost exclusively media that is compromised in the above ways. These misinformation machines get tens of millions of eyeballs (or more) - how can we compete and restore logic to America?

Each of us individually does not have the reach, but together we can make a massive difference – really! Together and engaged, we outnumber those who wish to spread misinformation.

By the way, if you do not have the energy to do these things - there is no judgement. Take care of yourself first and foremost!

Here’s my quick gameplan for individuals:

Some great resources you can use to post and form your arguments:

If we all do even some of these things, we will start to pop the misinformation bubble with logic and facts.

This is the third recap of my new mini project, where I post a quote by a Founding Father each day to this Bluesky account.

“Guard with jealous attention the public liberty.”

“These are the times that try men’s souls.”

“… the man who never looks into a newspaper is better informed than he who reads them”

“Tyranny, like hell, is not easily conquered; yet we have this consolation with us, that the harder the conflict, the more glorious the triumph.”

“Enlightened statesmen will not always be at the helm.”

“There is danger from all men. The only maxim of a free government ought to be to trust no man living with power to endanger the public liberty”

“Those who expect to reap the blessings of freedom must, like men, undergo the fatigue of supporting it.”

“But a Constitution of Government once changed from Freedom, can never be restored. Liberty, once lost, is lost forever.”

“When angry, count ten, before you speak; if very angry, a hundred”

“… guard against the impostures of pretended patriotism”