Nationalize AI - Part 4 - Inefficient

09 Dec 2024. Reading time: 3 minutes

This is Part 4 in a multipart series on nationalizing AI:

- Nationalize AI - Part 1 - Defining The Problem

- Nationalize AI - Part 2 - Labor

- Nationalize AI - Part 3 - Corporate Power

- Nationalize AI - Part 5 - Superiority

- Nationalize AI - Part 6 - Conclusion

Inefficient

The details here on how AI systems work are deliberately hand-wavy and high-level. This is a minor point, but worth keeping in mind as I make generalizations.

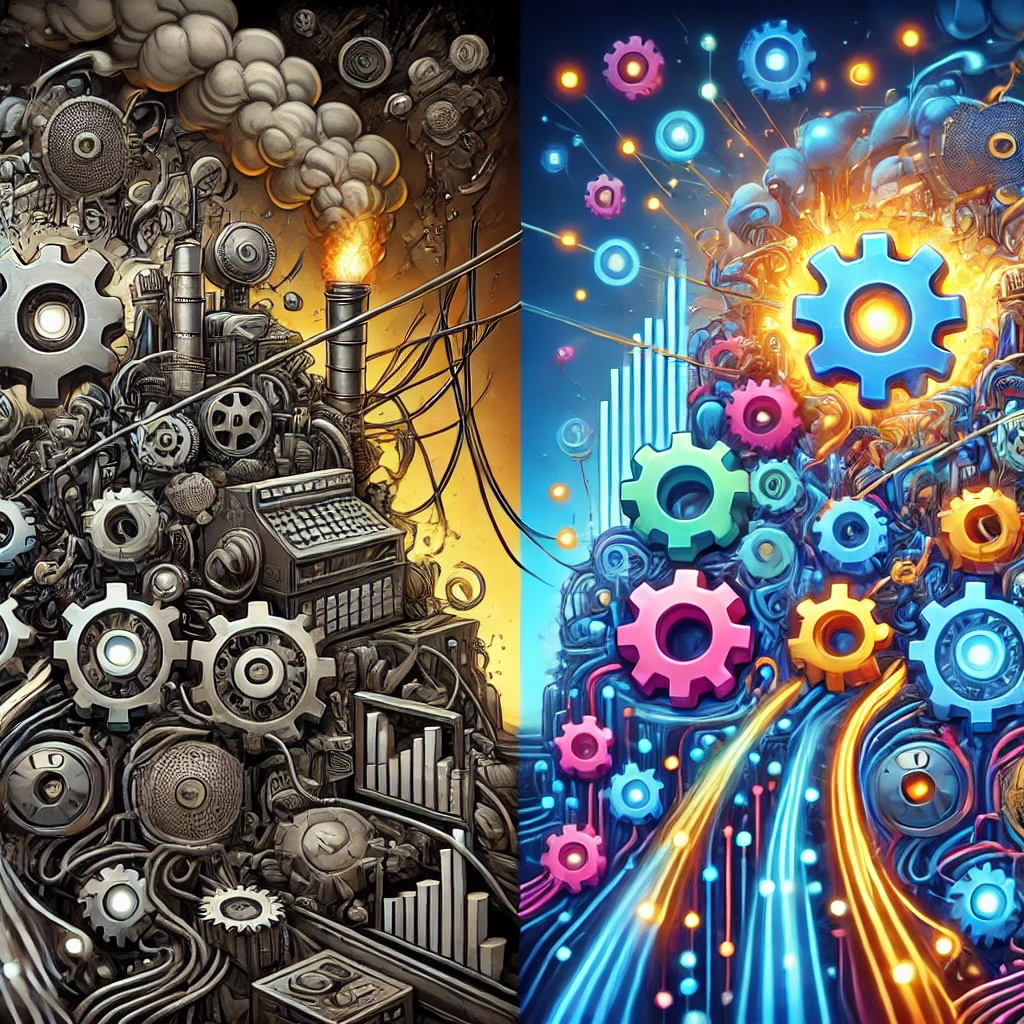

Markets, generally, are an excellent mechanism for allocating resources and driving innovation through competition. However, in this article, I argue that the unique characteristics and risks of AI make it an exception where market forces fall short of ensuring efficiency, equity, and safety.

The Current Landscape & Its Inefficiencies

Only a handful of companies are competing to build these very large systems, and much of their effort is duplicated: the models largely rely on the same or similar datasets, techniques, and algorithms.

While duplication of effort is common across industries, in AI it poses unique challenges:

Resource Intensity

Developing state-of-the-art AI models requires enormous computational resources, energy, and capital. Duplicating that work wastes resources that could be used elsewhere. They could fund novel AI development or benefit other industries, and simply conserving them could help keep prices reasonable for everyone else.

Moreover, the resources each firm needs for its AI systems are growing, and that growth is accelerating quickly. This is especially true of energy (and therefore money): tomorrow’s AI systems will require massive upgrades to energy generation, and likely to grid infrastructure as well. In other words, the duplicated resources are already enormous, and the waste will only get vastly worse.

Limited Competition

The high cost of developing these systems creates significant barriers for smaller players, consolidating control among a few major corporations.

Additionally, because they share similar datasets and techniques, most AI systems do not offer substantially different capabilities, so the duplication does little to advance the field.

Missed Opportunities for Collaboration

Instead of pooling efforts to address broader societal challenges, companies compete to slightly outpace each other with similar technologies.

You can see this rat race simply by looking at AI benchmark leaderboards. Each company essentially strives only for marginal percentage improvements over the others on these benchmarks, without attempting to solve real-world problems.

Why Nationalization Solves For This

Given the unprecedented nature of AI (especially the high cost of developing these systems) and the lackluster competition in the industry, I contend that AI development in its current state is clearly failing to optimize AI for the public good. This misallocation of resources requires some form of government intervention.

A clearly defined and transparent public AI program removes much of this massive, unnecessary duplication of resources. It also ensures that research and development are directed toward the major societal issues that AI can, and therefore should, help fix.

I’ve made the following point in each article in the series so far because I believe it holds for each, and this one is no different: these issues alone demonstrate the necessity of nationalization.

In the next article in the series, we will discuss why it’s important to be the first to control superintelligent AI: Nationalize AI - Part 5 - Superiority